시작하기 전에

- 실습에서는 정해진 기간 동안 Google Cloud 프로젝트와 리소스를 만듭니다.

- 실습에는 시간 제한이 있으며 일시중지 기능이 없습니다. 실습을 종료하면 처음부터 다시 시작해야 합니다.

- 화면 왼쪽 상단에서 실습 시작을 클릭하여 시작합니다.

Estimate the amount of data processed by a query

/ 20

Determine slot usage using SQL query

/ 20

Complete a dry run of a query

/ 20

Storing and querying massive datasets can be time consuming and expensive without the right infrastructure. BigQuery is a serverless and fully managed enterprise data warehouse that enables fast and cost-effective queries using the processing power of Google's infrastructure. In BigQuery, storage and compute resources are decoupled, which provides you the flexibility to store and query your data according to your organization's needs and requirements.

BigQuery makes it easy to estimate query resource usage and costs using a variety of tools including the BigQuery query validator in the Google Cloud console, the dry-run flag in the bq command-line tool, the Google Cloud Pricing Calculator, and using the API and client libraries.

In this lab, you use the BigQuery query validator and the bq command-line tool to estimate the amount of data to be processed before running a query. You also use a SQL query and the API to determine resource usage after a query has run successfully.

In this lab, you learn how to:

For each lab, you get a new Google Cloud project and set of resources for a fixed time at no cost.

Sign in to Qwiklabs using an incognito window.

Note the lab's access time (for example, 1:15:00), and make sure you can finish within that time.

There is no pause feature. You can restart if needed, but you have to start at the beginning.

When ready, click Start lab.

Note your lab credentials (Username and Password). You will use them to sign in to the Google Cloud Console.

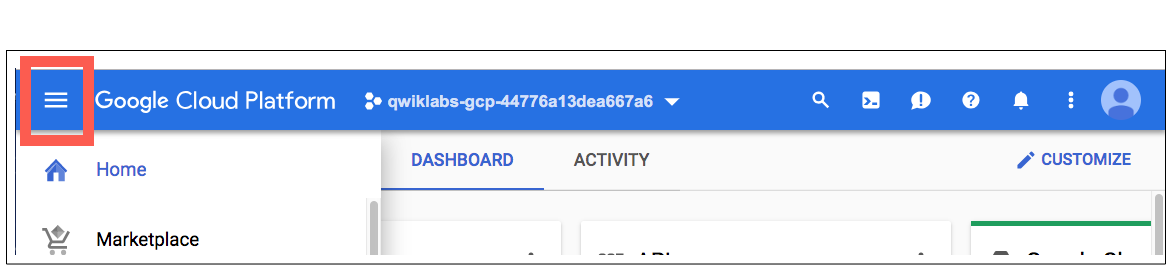

Click Open Google Console.

Click Use another account and copy/paste credentials for this lab into the prompts.

If you use other credentials, you'll receive errors or incur charges.

Accept the terms and skip the recovery resource page.

Click the Start Lab button. If you need to pay for the lab, a pop-up opens for you to select your payment method. On the left is a panel populated with the temporary credentials that you must use for this lab.

Copy the username, and then click Open Google Console. The lab spins up resources, and then opens another tab that shows the Choose an account page.

On the Choose an account page, click Use Another Account. The Sign in page opens.

Paste the username that you copied from the Connection Details panel. Then copy and paste the password.

After a few moments, the Cloud console opens in this tab.

Google Cloud Shell is a virtual machine that is loaded with development tools. It offers a persistent 5GB home directory and runs on the Google Cloud.

Google Cloud Shell provides command-line access to your Google Cloud resources.

In Cloud console, on the top right toolbar, click the Open Cloud Shell button.

Click Continue.

It takes a few moments to provision and connect to the environment. When you are connected, you are already authenticated, and the project is set to your PROJECT_ID. For example:

gcloud is the command-line tool for Google Cloud. It comes pre-installed on Cloud Shell and supports tab-completion.

Output:

Example output:

Output:

Example output:

When you enter a query in the Google Cloud console, the BigQuery query validator verifies the query syntax and provides an estimate of the number of bytes to be processed by the query.

In this task, you query a public dataset (New York Citi Bikes) maintained by the BigQuery public datasets program. Using this dataset, you learn how to use the query validator to validate a SQL query and to estimate the amount of data to be processed by a query before you run it.

), under Analytics, click BigQuery.

), under Analytics, click BigQuery.The Welcome to BigQuery in the Cloud Console message box opens. This message box provides a link to the quickstart guide and the release notes.

Click Done.

In the SQL Workspace toolbar, click the Editor tab to open the SQL query editor.

When executed, this query returns the count of the station names that contain the text "Broadway" in the column named start_station_name in the citibike_trips table.

BigQuery will automatically run the query validator when you add or modify the code in the query editor.

A green or red check displays above the query editor depending on whether the query is valid or invalid. If the query is valid, the validator also displays the amount of data to be processed if you choose to run the query.

According to the query validator, this query will process 1.06 GB when run.

The query returns the number of records (5,414,611) that contain the text "Broadway" in the column named start_station_name.

Click Check my progress to verify the objective.

BigQuery uses slots (virtual CPUs) to execute SQL queries, and it automatically calculates how many slots each query requires, depending on query size and complexity. After you run a query in the Google Cloud console, you receive both the results and a summary of the amount of resources that were used to execute the query.

In this task, you identify the job ID of the query executed in the previous task and use it in a new SQL query to retrieve additional information about the query job.

For example, the value qwiklabs-gcp-01-5f4dee7a15a3:US.bquxjob_403a14df_185dd37737a begins with the project ID, followed by the location where the job was executed, and ends with the job ID. The syntax for :US. identifies the location where the job was executed.

The project ID is the first part qwiklabs-gcp-01-5f4dee7a15a3 (before :US.), while the job ID is the last part bquxjob_403a14df_185dd37737a (after :US.).

'YOUR_ID' with your job ID (such as 'bquxjob_403a14df_185dd37737a'):When executed, this query returns the slot usage of the query job previously executed on the Citi Bikes public dataset.

The output of this query provides a table that shows the query stages and the associated slot usage for each stage.

Since an individual task in a query is executed by one slot, the sum of values in the column named job_stages.completed_parallel_inputs is the number of total slots used to run the query.

However, after a single slot has completed the first task assigned to it, it can be reassigned to complete another task.

So understanding the total slot time used to run the query (value provided in the column named total_slot_ms) is also important. Specifically, the slot time (in milliseconds, or ms) is provided for the entire query job and for each stage of the query, which represents the amount of slot time used to complete that stage.

For example, a query may complete 150 tasks, but if each task executes quickly, the query may actually use a lower number of slots, such as 100, rather than 150 slots.

Click Check my progress to verify the objective.

You can also retrieve information about a specific query job using the API. In BigQuery, you can use the API directly by making requests to the server, or you can use client libraries in your preferred language: C#, Go, Java, Node.js, PHP, Python, or Ruby.

In this task, you use the Google APIs Explorer to test the BigQuery API and retrieve the slot usage for the query that you ran in a previous task.

In a new Incognito browser tab, navigate to the BigQuery API page for the jobs.get method.

In the Try this method window, input your project ID and job ID that you identified in the previous task.

For example, qwiklabs-gcp-01-5f4dee7a15a3 for the project ID and bquxjob_403a14df_185dd37737a for the job ID.

If asked to confirm your login, select the student username that you used to login to Google Cloud for the previous tasks:

To see the value for completed parallel inputs for the first stage, scroll down to statistics > query > queryPlan > name: S00 > completedParallelInputs.

To see the total slots used for the entire query job, scroll down to the end of the results to review the value for totalSlotMs.

In the bq command-line tool, you can use the --dry_run flag to estimate the number of bytes read by the query before you run the query. You can also use the dryRun parameter when submitting a query job using the API or client libraries. Dry runs of queries do not use query slots, and you are not charged for performing a dry run.

In this task, you learn how to complete a dry run of a query using the bq command-line tool in Cloud Shell.

The output shows you the estimated amount of bytes to be processed by the query before you run the query to retrieve any results.

Now that you know how many bytes will be processed by the query, you have the information needed to decide on your next steps for your workflow.

Click Check my progress to verify the objective.

When you have completed your lab, click End Lab. Google Cloud Skills Boost removes the resources you’ve used and cleans the account for you.

You will be given an opportunity to rate the lab experience. Select the applicable number of stars, type a comment, and then click Submit.

The number of stars indicates the following:

You can close the dialog box if you don't want to provide feedback.

For feedback, suggestions, or corrections, please use the Support tab.

Copyright 2022 Google LLC All rights reserved. Google and the Google logo are trademarks of Google LLC. All other company and product names may be trademarks of the respective companies with which they are associated.

현재 이 콘텐츠를 이용할 수 없습니다

이용할 수 있게 되면 이메일로 알려드리겠습니다.

감사합니다

이용할 수 있게 되면 이메일로 알려드리겠습니다.

한 번에 실습 1개만 가능

모든 기존 실습을 종료하고 이 실습을 시작할지 확인하세요.